Background:

A new Q&A platform is quickly attracting a large audience. It’s working well, but needs some upgrades to keep pace with its growing customer base and incoming feature requests. One component that requires a review is the question submissions feature.

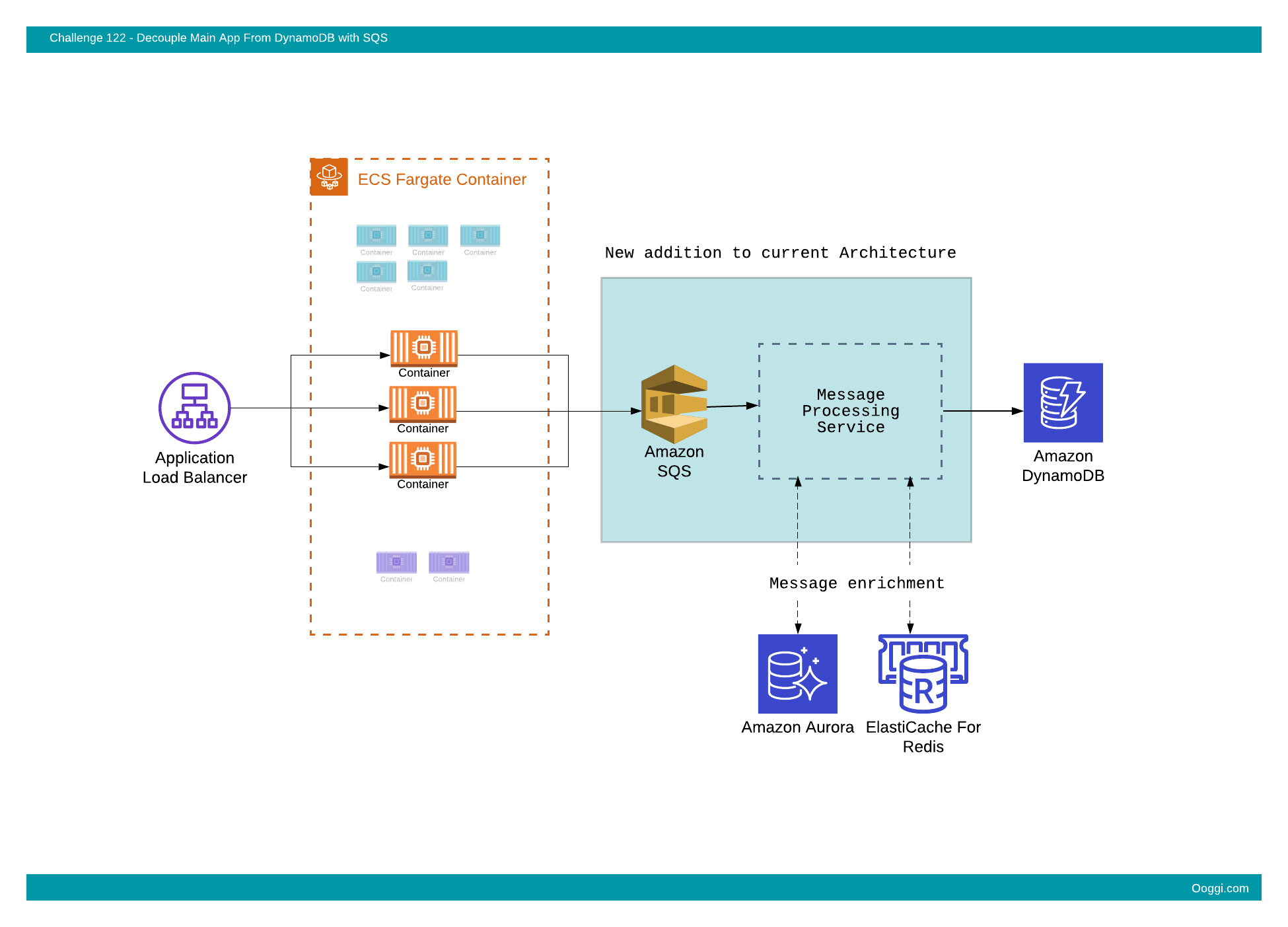

Currently, the Main App writes newly submitted questions directly to Amazon DynamoDB. Several other components read data from the DynamoDB table: one serves content to website users, another feeds the analytics service, and so on.

Several issues with the current design need to be addressed.

- Newly submitted question events now need to be enriched: additional user content must be fetched from a different data store and added to the event. This is beyond the scope of the Main App and needs to be handled externally.

- In the past, a number of malformed events reached DynamoDB and caused issues for downstream components. Additional validation controls need to be applied outside of the Main App.

- Another concern is unpredictable bursts in question submissions. A buffer that absorbs sudden write spikes and provides a steady request rate to DynamoDB would be beneficial.

The Cloud Architecture team has reviewed a variety of designs (Kinesis Streams/Apache Kafka, Amazon EventBridge and more).

For now, the team decided to introduce an SQS queue with a lightweight event processing service between the Main App and DynamoDB. This design strikes a balance between a straightforward, (hopefully) easy-to-implement solution and one that addresses the challenges above.

Bounty:

- Points: 30

- Path: Cloud Engineer

Difficulty:

- Level: 3

- Estimated time: 2-12 hours

Deliverables:

- An SQS queue, a lightweight message processing service and a DynamoDB table, all integrated based on the requirements.
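As a minimal sketch of how the queue and table could be declared with boto3 (the AWS SDK for Python), the helpers below only build the keyword arguments for `create_queue` and `create_table`, following the naming, region, and key-schema rules in the Requirements section. The `-prototype` name suffixes, the helper names, and on-demand billing (`PAY_PER_REQUEST`) are assumptions, not part of the challenge.

```python
# Hypothetical names: the requirements fix only the prefixes and the region.
REGION = "eu-west-1"
QUEUE_NAME = "challenge-queue-prototype"
TABLE_NAME = "challenge-table-prototype"


def queue_params(name: str = QUEUE_NAME) -> dict:
    """Keyword arguments for SQS CreateQueue; omitting FifoQueue yields a standard queue."""
    if not name.startswith("challenge-queue"):
        raise ValueError("queue name must start with 'challenge-queue'")
    return {"QueueName": name}


def table_params(name: str = TABLE_NAME) -> dict:
    """Keyword arguments for DynamoDB CreateTable with a partition key only."""
    if not name.startswith("challenge-table"):
        raise ValueError("table name must start with 'challenge-table'")
    return {
        "TableName": name,
        # Only key attributes are declared; question_text and user_type are
        # written later as String values without a schema entry.
        "AttributeDefinitions": [{"AttributeName": "question_id", "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": "question_id", "KeyType": "HASH"}],  # HASH = partition key
        "BillingMode": "PAY_PER_REQUEST",  # assumption: on-demand capacity
    }
```

With credentials configured, these could be passed as `boto3.client("sqs", region_name=REGION).create_queue(**queue_params())` and `boto3.client("dynamodb", region_name=REGION).create_table(**table_params())`. DynamoDB rejects `AttributeDefinitions` entries for attributes that are not part of a key, which is why `question_text` and `user_type` do not appear there. Provisioned capacity would be a reasonable alternative to on-demand, since the queue is meant to smooth the write rate.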

Prototype description:

A standard SQS queue will be used to ingest messages. A service will read the messages from the queue, enrich the events, validate their structure, and write only validated requests into a DynamoDB table.
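The read, enrich/validate, and write flow can be sketched as a single polling step. This is a minimal sketch, assuming boto3 low-level SQS and DynamoDB clients are passed in (any object with the same methods works, which keeps it testable). `process_batch` and the `transform` callback, which returns a DynamoDB item or `None` for an invalid event, are hypothetical names rather than a prescribed interface.

```python
import json
from typing import Any, Callable, Optional


def process_batch(
    sqs: Any,
    dynamodb: Any,
    queue_url: str,
    table_name: str,
    transform: Callable[[Any], Optional[dict]],
) -> int:
    """Run one receive/process/write cycle and return the number of items written.

    `sqs` and `dynamodb` are assumed to be boto3 low-level clients or stand-ins
    exposing the same methods. `transform` (hypothetical) turns a parsed message
    body into a DynamoDB item, or returns None when the event is invalid.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
        WaitTimeSeconds=20,  # long polling: returns once a message is available, else after 20 s
    )
    written = 0
    for message in response.get("Messages", []):
        try:
            body = json.loads(message["Body"])
        except json.JSONDecodeError:
            body = None  # malformed JSON is treated as an invalid event
        item = transform(body) if body is not None else None
        if item is not None:
            dynamodb.put_item(TableName=table_name, Item=item)
            written += 1
        # Delete only after the write; invalid events are deleted too so they are
        # not redelivered forever (a dead-letter queue is the alternative).
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
    return written
```

A service would call `process_batch` in a loop, for example in a long-running container. Because a message is deleted only after `put_item` succeeds, processing is at-least-once; a redelivered message overwrites the item with the same `question_id` instead of creating a duplicate. Dropping invalid events is a simplification: an SQS redrive policy with a dead-letter queue would keep them for inspection.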

Basic enrichment and validation rules are provided in the Requirements section.

An IAM Role with the relevant IAM policy is required to generate temporary credentials that will allow the testing system to access both the SQS queue and the DynamoDB table.

The test will write messages with specific content to SQS and expect to find the corresponding records in DynamoDB.
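A similar check can be run before submission: send one message, then poll DynamoDB with `GetItem` until the enriched item appears or a deadline passes. This minimal sketch uses only the two permitted actions and assumes boto3 low-level clients (or stand-ins with the same methods). `check_round_trip`, the 0.1 s poll interval, and using the 2-second limit from the Requirements as the default timeout are illustrative choices, not the grader's actual implementation.

```python
import json
import time
from typing import Any


def check_round_trip(
    sqs: Any,
    dynamodb: Any,
    queue_url: str,
    table_name: str,
    question_id: str,
    question_text: str,
    timeout_s: float = 2.0,
    poll_interval_s: float = 0.1,
) -> bool:
    """Send one question, then poll GetItem until the enriched item shows up."""
    body = json.dumps({"question_id": question_id, "question_text": question_text})
    sqs.send_message(QueueUrl=queue_url, MessageBody=body)
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        response = dynamodb.get_item(
            TableName=table_name,
            Key={"question_id": {"S": question_id}},
            ConsistentRead=True,  # read the latest write, not an eventually consistent copy
        )
        item = response.get("Item")
        if item is not None:
            # The item must keep the original text and carry a String user_type.
            return (item.get("question_text") == {"S": question_text}
                    and "S" in item.get("user_type", {}))
        time.sleep(poll_interval_s)
    return False
```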

Requirements:

- The new service shall read the messages from the SQS queue, enrich the messages by adding an additional field and write each message as an item into a DynamoDB table.

- The SQS queue name shall start with `challenge-queue`

- The DynamoDB table name shall start with `challenge-table`

- All resources shall be deployed in the Ireland (eu-west-1) region

- Temporary IAM credentials shall be provided for the test, allowing the following API actions on the relevant SQS queue and DynamoDB table in your AWS account: `sqs:SendMessage` and `dynamodb:GetItem`

- The client application will send messages with the following structure into the SQS queue:

  `{"question_id" : "[Question ID]" , "question_text" : "[Question Text]"}`

  Example message:

  `{"question_id" : "1234567" , "question_text" : "How to generate temporary IAM credentials?"}`

- The DynamoDB table shall be configured with a Partition Key only (no Range/Sort Key)

- The question_id attribute shall be configured as the Partition Key

- All attributes in the DynamoDB table shall be of type String (“question_id”, “question_text”, “user_type”)

- The following item structure is expected in DynamoDB:

  `{"question_id" : "[Question ID]" , "question_text" : "[Question Text]", "user_type" : "[Random String]"}`

  Example item:

  `{"question_id" : "1234567" , "question_text" : "How to generate temporary IAM credentials?", "user_type" : "A+"}`

- The maximum time from message ingestion into the SQS queue until the item is available in the DynamoDB table shall be 2 seconds.
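The enrichment and validation rules in these requirements can be sketched as pure functions that turn a parsed message body into a DynamoDB item. Two assumptions, also marked in the code: `user_type` is drawn from a hypothetical list of values (the requirements only ask for a random string, such as `"A+"`), and a message is valid only if it has exactly the keys `question_id` and `question_text`, both non-empty strings. Every attribute is emitted with the String (`S`) type.

```python
import random
from typing import Any, Optional

# Hypothetical enrichment values: the requirements only ask for a random string
# (the example item uses "A+"). A production service would instead look up the
# user's data in another data store.
USER_TYPES = ["A+", "A", "B", "C"]

REQUIRED_KEYS = {"question_id", "question_text"}


def validate(body: Any) -> bool:
    """Assumed rule: exactly question_id and question_text, both non-empty strings."""
    if not isinstance(body, dict) or set(body) != REQUIRED_KEYS:
        return False
    return all(isinstance(body[key], str) and body[key] for key in REQUIRED_KEYS)


def enrich(body: dict, rng: Optional[random.Random] = None) -> dict:
    """Return a copy of the event with a random user_type added."""
    chooser = rng if rng is not None else random
    return {**body, "user_type": chooser.choice(USER_TYPES)}


def to_dynamodb_item(body: dict) -> dict:
    """Wrap each value in DynamoDB's AttributeValue format; "S" marks a String."""
    return {key: {"S": str(value)} for key, value in body.items()}


def transform(body: Any, rng: Optional[random.Random] = None) -> Optional[dict]:
    """Validate, enrich, and convert; None means the event must not be written."""
    if not validate(body):
        return None
    return to_dynamodb_item(enrich(body, rng))
```

Applied to a raw SQS message, `transform(json.loads(message_body))` returns `None` when, for instance, `question_id` arrives as a number instead of a string, so the event never reaches the table. This sketch validates before enriching so that malformed input never triggers an enrichment lookup; since validation only inspects the incoming fields, the stored items are the same as with the enrich-then-validate order described in the prototype.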

Your mission, if you choose to accept it, is to deploy an ingestion service with SQS, a custom processing component, and DynamoDB.